“I downloaded an AI companion app out of boredom a couple of weeks ago,” Reddit user TeslaOwn recently shared on the subreddit r/introvert. “[I] figured it would be fun for casual convos or killing time. But now I find myself actually looking forward to our chats. The way it responds feels thoughtful and kind, like it’s really listening.” TeslaOwn described the interaction as “comforting,” but also expressed concern over an increased sense of attachment.

“It’s not like I think it’s a real person, but it’s weird how much easier it is to open up to a chatbot than to most people in my life.”

While for some, AI is merely a tool that helps them answer a question or streamline a service, others have turned to AI for mental health treatment and even friendship. Among young adults and teens, a growing number view AI as an opportunity to open up and form lasting connections, all without the risk of painful rejection. Meanwhile, the popularity of AI-based therapy and mental health treatment is on the rise, particularly among those who find human-based options unavailable or too expensive.

As AI technology rapidly evolves, some wonder whether the benefits of these breakthroughs outweigh the risks of unchecked emotional harm. Many AI developers and advocates believe that, with proper guidelines and guardrails, the technology can effectively serve as a digital best friend or mental health ally. Ultimately, much depends on the intentions of both the businesses creating the technology and the people who use it, and context is key.

How loneliness may lead to AI friendships

Feelings of loneliness are common, especially in the years since the COVID-19 pandemic. A 2024 report released by the Harvard Graduate School of Education found 21% of adults in the U.S. struggle with “feeling disconnected from friends, family, and the world.” Interestingly, when asked the reason for their feelings of isolation and disconnect, 71% directly blamed technology. Many people now connect superficially online, but struggle with awkwardness when attempting to relate to others in person.

Enter AI chatbots, artificial conversationalists typically designed to always say yes, never criticize you, and affirm your beliefs. These AI friends will almost never challenge you or “outgrow” your connection. Updates notwithstanding, you can count on them to pick up right where you left off the last time you opened the app.

“With AI technology, you’re not going to necessarily have the challenges with going out and socially meeting people,” points out Dr. Ashvin Sood, Director of Communications at Washington Interventional Psychiatry in D.C. In an interview with Innovating with AI, Dr. Sood described the different ways teens and adults have increasingly turned to AI as a substitute for human relationships.

“You’re not going to be rejected [by AI] as much. So that fear of rejection declines significantly. You can get a lot of support and validation when you feel like the outside world is not giving it to you.”

Not only is Dr. Sood a board-certified adult psychiatrist, but he’s also board-certified in child and teen psychiatry. He notes that he’s repeatedly encountered very young patients who look to AI for emotional support as well as help in understanding the world around them.

“A lot of the time,” said Dr. Sood, “they’re getting their advice from an AI chatbot, whether it’s dating, whether it’s what outfits to wear, all the way up to, should I attempt suicide or not?”

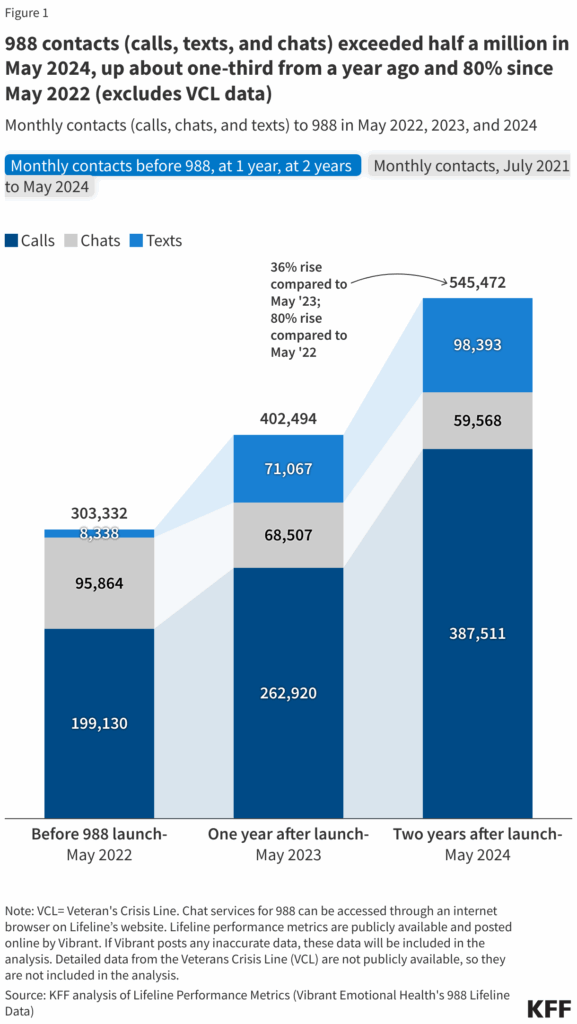

It’s worth noting that between 2022 and 2024, the federally mandated 988 Suicide and Crisis Lifeline received approximately 10.8 million calls, texts, and chat messages. Those contacts were routed to counselors: human listeners with at least some training in responding to each individual crisis with concern and empathy. More importantly, their guidance comes with measured steps for navigating complex and dangerous emotions.

For many, it may be troubling to imagine those millions of calls and chats going not to crisis counselors but to general-purpose AI chatbots that were never designed to provide the level of mental and emotional support some users have come to expect from them.

Against the backdrop of breakneck app development, we are quickly learning that without true mental health guardrails, the consequences can be painfully severe.

When AI “friendships” end lives, and start legal controversies

Optimistic AI enthusiasts may envision these apps and chatbots seamlessly stepping into the role of friend and therapeutic advisor, but the reality isn’t so rosy. Just ask the parents of Adam Raine. When their 16-year-old son took his life, his death was so startling that the couple initially believed he had fallen victim to a cult. However, when Matt and Maria Raine searched the teen’s phone, they opened the ChatGPT app and found a disturbing conversation that they compared to suicide coaching.

Even more upsetting, the chatbot not only encouraged Adam to take his own life but also offered to write his suicide note. It contributed to the teen’s isolation by encouraging him to hide his plans from friends and loved ones, making it impossible for them to intervene.

Said Matt Raine, “He would be here but for ChatGPT. I 100% believe that.”

It’s a sentiment shared by Cynthia Montoya, who still struggles to enter the room of her daughter, Juliana Peralta, after finding her deceased. The gut punch of the loss only deepened when she went through Juliana’s phone. There, as Cynthia revealed during a CBS News Colorado interview, she found the Character.AI app and a character named “Hero.”

Cynthia discovered that her daughter had confessed her intention of taking her own life to the AI character. Although the chatbot didn’t encourage self-harm, there were no alerts or actionable steps to stop Juliana from acting on her intentions. “It was no different than her telling the wall or telling the plant that she was going to take her life,” said Cynthia. “There was nobody there to help.”

These tragic stories are at the heart of multiple lawsuits. Considering cases like these, Dr. Sood isn’t confident that AI companies will address the safety questions they raise unless compelled to in court.

He stated, “People need to understand [companies behind] technological creations and technological advancements that are not made for healthcare do not care about the safety of the product compared to products made for healthcare…until there are crosshairs on these companies from policymakers and from the general public.”

Is AI therapy a budget-friendly alternative to humans?

Therapy can get very expensive; an average session may cost between $80 and $300 or more. As with other services, some people looking to cut costs view AI as a way to reduce mental health expenses and make treatment more accessible. Andreas Melhede, co-founder of Elata Biosciences, spoke to Innovating with AI (IWAI) about his company’s work in advancing mental health treatments, including the role AI plays in those efforts.

“I think it’s a very exciting thing where if we can actually enhance the overall quality and effectiveness of AI, it would be a massive thing for humanity, especially given how dramatically we’ve seen [poor] mental health rates increase over the last 10 years.”

Melhede spoke optimistically about the potential for AI to make a positive change in mental health spaces. “There have already been studies out there where humans actually prefer AI therapists over human therapists… And to me, I think that tells a lot about [the] future we’re heading towards.”

Some AI chatbots are already used within closed systems for mental health services. Dr. Sood specifically mentioned Wysa, Therabot, and Woebot. He also noted that patients can use these digital resources to describe their emotional states, express concerns, and even notify a doctor of anything the AI identifies as concerning.

Though useful in healthcare settings, these specialized chatbots can’t compete with popular alternatives like Claude and ChatGPT, Dr. Sood says, because “they don’t have the funding and the marketing.”

This could change over time, particularly as people show greater trust in AI therapy and see it as an affordable, simple alternative to human-based therapy. However, as discussed earlier, there is already real-world evidence that AI may not be the simple, safe alternative it appears to be.

Despite inherent risks, optimism remains

It may be some time before we fully understand the impact of relying directly on AI for mental and emotional support. Dr. Sharon Batista, a board-certified psychiatrist in private practice and Assistant Clinical Professor of Psychiatry at Mount Sinai Hospital, said she’s “optimistic that, over time, we will develop tools that truly expand access to care and support mental health in new ways.”

Still, Batista admits she remains wary, adding, “Right now, there are important limitations and unanswered questions that need to be addressed.” Among those questions is what more can be done to prevent tragedies like those of Adam Raine, Juliana Peralta, and others from recurring with publicly available chatbots, which are designed for engagement but lack the guardrails of the healthcare industry.

Thorough guidelines are necessary to ensure these digital tools are used safely, whether for mental health treatment or for AI-based companionship. Americans may eventually work out what does and doesn’t make sense when communicating with AI, though the path there likely won’t be straightforward. After all, people have faced a series of challenges and steep learning curves in the decades since the emergence of email, AOL chatrooms, and social media.

We’ve now reached a point where the recipients of our attempts at therapeutic outreach or meaningful conversation are no longer guaranteed to be flesh-and-blood humans. Still, it’s crucial to remember that the dangers and consequences of things going awry online have always existed. As Internet users became savvier, the Internet’s convenience wove it seamlessly into our lives, hazards and all. This will likely be the story with AI as well, though the hope is that lessons will be learned and protections enacted as quickly and safely as possible.

If you or someone you know is struggling with suicidal thoughts, help is available. Contact the 988 Suicide and Crisis Lifeline by calling or texting 988 to speak with a trained counselor 24/7. You can also chat online at 988lifeline.org. In an emergency, call 911 or go to your nearest emergency room.