When it comes to AI adoption, we’re basically living in two parallel universes at the same time.

Universe 1: AI is rapidly reshaping the economy and work as we know it

Universe 2: AI is basically useless and wasting a lot of money

For example, in Universe 2…

The Atlantic asks, “Are we in an AI bubble?” (including lots of links to studies saying AI is not useful). The New York Times says, “Companies Are Pouring Billions Into A.I. It Has Yet to Pay Off.”

And meanwhile, back in Universe 1…

Salesforce says it laid off 4,000 of its 9,000 customer service reps because AI has made them unnecessary, and Derek Thompson writes that “The Evidence That AI Is Destroying Jobs For Young People Just Got Stronger.”

So, depending on who you ask, AI is either doing nothing or changing everything.

You can probably guess that my experience at my own companies – adopting AI at my software development firm and seeing 1,000+ students build AI consultancies here at IWAI – places me firmly in the “reshaping the economy” camp.

But I also see a ton of news, studies and opinions from folks who are living in Universe 2 – so today I want to explore the other side of the looking glass.

Even for AI enthusiasts like us, most of whom are seeing clear benefits from incorporating AI into our daily work, understanding what’s happening in the “outside world” is crucial. One big reason I stress this: almost all of my IWAI students are shocked by how many pure AI novices they encounter – even when they go to conferences or meetups that are ostensibly about tech and AI!

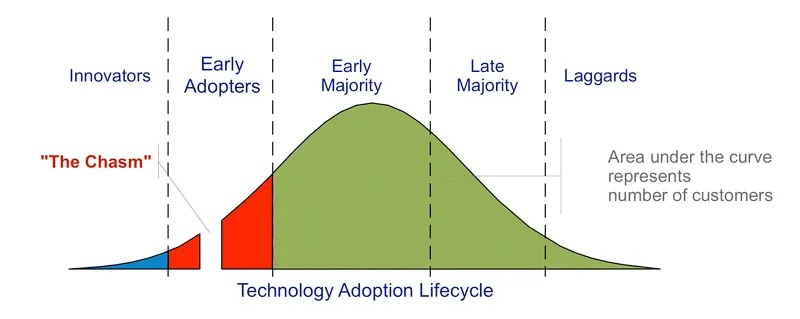

Even though the number of folks in Universe 1 is growing, we’re still waaaaaay on the left side of the tech adoption curve for serious AI workflows:

So, let’s explore what’s behind the “AI is a nothingburger” theme by looking at the two major camps: The Skeptics and the Slowpokes.

The data is messy, and also real

First, I want to acknowledge that the data on both sides is real. Unlike in some other areas of life, we’re dealing not with misinformation, but instead with folks looking at the same situation through different prisms, and seeing a very different image of reality as a result.

My basic view is that the studies we see about folks rejecting or failing at AI adoption reflect pre-existing structural problems or perverse incentives within organizations more than any fault of the actual AI tools available to them.

The Skeptics: Journalists are attracted to negative or counter-trend stories

I started my career in journalism, so I feel comfortable saying that when something directly affects the career of a writer, it is really hard to distinguish between it actually being very important and it just being something that writers really enjoy talking about.

In other words, the fact that AI is extremely salient for people whose paycheck relies on being a good writer is one reason that we see a lot of AI skepticism in magazines and newspapers. These folks aren’t lying, but the reality is that magazines and newspapers are created by regular people in a room pitching ideas, and if you are a regular person whose job is clearly threatened by Claude Opus 4, it is likely you will end up with a lot of pitches for articles about how AI sucks at writing (and, by extension, why professional human writers are awesome).

A parallel to this is the recent controversy at DragonCon (a fan convention in the same vein as ComicCon) over the sale of AI art. There are a lot of folks at DragonCon who highly value the creative art that they and their fellow attendees create. Someone showing up and selling AI art at a booth was not only a threat to their values (one of which is the celebration of human creativity) but also at least an indirect threat to some of their livelihoods. Something very similar is happening to writers and journalists right now, and that inevitably creates a situation where the editorial calendar is weighted toward anti-AI articles.

Some journalists, like Derek Thompson (whose post I mentioned above), are able to show us a longer arc, where they start out skeptics but also change their mind when presented with new evidence. But Thompson benefits from being a relatively famous guy with a big platform, so people like me can read his thoughts every week and see him evolve. The typical non-famous journalist is more likely to write one article at a time on unrelated topics, so we end up with a lot of anti-AI posts with less context, which sometimes makes it feel like your feed is full of reasons why AI is a bubble that is soon to burst.

I think we need to look at basically every news article (and underlying study or paper) through this lens – the folks who are editing and curating the major publications are naturally attracted to contrarian stories, because surprising things are more fun to read.

However, contrarian attitudes are not always accurate reflections of reality. It is also important to consider the possibility that the obvious thing is true: writing, researching and coding 3x-10x faster, without sacrificing much quality, makes a big difference for many people’s jobs.

(At IWAI we call this “better, faster, cheaper,” and it is a lot of fun to see our students make it reality at company after company.)

The Slowpokes: Enterprises with lots of bureaucracy are adopting AI slowly and choppily

I went to an immersive Titanic experience this summer and learned that big ships with tiny rudders are bad at turning, sometimes resulting in their demise. This is a useful way of thinking about the big companies struggling to adopt AI.

McKinsey says that eight in 10 companies see “no significant bottom-line impact” from AI adoption, and M.I.T. similarly says that “95% of AI initiatives at companies fail to turn a profit.” These studies have gotten a ton of mileage in mainstream news and magazine publications, in part because they counter the common wisdom.

So, what’s going wrong with these companies that are struggling to profitably adopt AI?

I think the biggest differentiator is likely the level of existing bureaucracy and red tape within the company when AI adoption starts.

When I am training AI consultants, I almost always tell them to focus on small and mid-size businesses in the 5-100 employee range. Bigger than that, and the ship is so big that it’s almost impossible to turn unless you have a very tech-forward CEO. And even then, it is a rocky road because “optimization” often means AI-induced layoffs, like what we’ve seen at Salesforce and Microsoft.

I suspect that most failed AI adoption initiatives suffer from three major problems:

- A lack of direction (i.e., employees are being told to do something with AI, and they are essentially “performing” AI adoption without really caring)

- A sense among employees that adopting AI is antithetical to their own career growth

- A slow feedback cycle – at a large company, “looking like you’re doing something” to adopt AI is effectively indistinguishable from actually doing something, at least for the first year

In other words, everything moves slowly, it’s really hard to verify whether people are on the right track with how they want to implement AI, and it’s super easy for people to “slow-roll” adoption if they think it will preserve the status quo (i.e., their current level of job security) for a while longer.

As with the journalists and creative folks, nobody has to be doing anything malicious here – but because they’re incentivized to keep the status quo in place, it’s very easy for everyone to move a little bit slower or be a little bit more skeptical, adding up to an institution-wide failure to adapt to rapid change.

Incentives are everything

In short, the incentives within organizations are a huge factor in whether their teams end up in Universe 1 or Universe 2.

So if you want to adopt AI (or help your clients adopt AI) successfully, addressing perverse incentives by creating an opportunity for everyone to benefit from efficiency is a key first step. If you have a bunch of staff who think AI is going to replace their jobs and degrade their quality of life, you are bound for a very difficult road to AI adoption.

At the same time, the slowness of adoption among the Skeptics and the Slowpokes is a major competitive advantage for those of us already in Universe 1. To paraphrase Warren Buffett:

“The future is never clear; you pay a very high price for a cheery consensus.”

In other words, early adopters are often able to achieve unusually good financial outcomes by virtue of “getting in” early. We’re already seeing this with our 1,000+ consultancy students, and the slowness of adoption among the masses means early adopters will continue to have outsize opportunities for years to come. And by contrast, those in Universe 2 will pay “a high price for a cheery consensus” if they wait years to get their AI adoption incentives right.